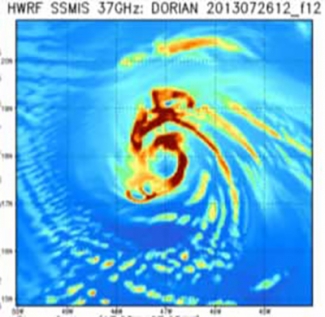

When a DTC pioneers’ plaque is eventually installed, Ligia’s name will be on it. She was involved with DTC activities even before it formally existed, assessing the feasibility of high-resolution ensemble systems with Steve Koch. She now works principally on tropical storm forecast models as lead of the DTC Hurricane Task. For insider information about the new HWRF release and its assessment, she is the first person to call.

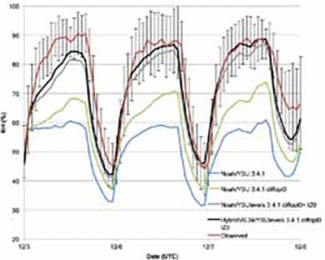

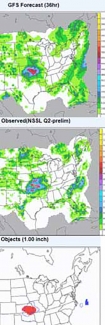

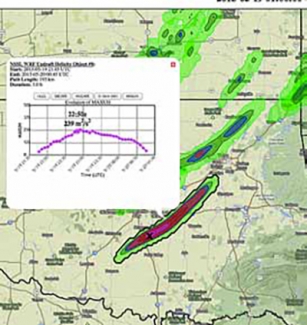

Ligia’s interest in meteorology and numerical modeling first developed at the University of São Paulo, Brazil, where as an undergraduate she helped provide rainfall forecasts for the local television station and the state environmental agency. As she tells it, this was certainly a trial by fire, but it was also an eye-opening experience with the operational side of meteorology that stuck with her. She credits these forecasts with preventing many deaths and injuries in urban regions where landslides pose a very serious risk. After PhD studies at Colorado State University and a subsequent postdoc in Boulder, she returned to Brazil and the operational arena for a two-year stint on a tiger team that got a numerical modeling system going in the National Weather Service. Since her return to Boulder in 2003, she has worked at the DTC and at the Global Systems Division of ESRL on several numerical modeling, data assimilation, and forecast evaluation projects, including projects to choose the dynamical core of the Rapid Refresh model and to improve the air-sea fluxes in Hurricane WRF.

Ligia remembers Pedro Silva Dias, one of her undergraduate professors, saying that the relationship between research and operations should be a glass door, with research-to-operations (R2O) matched by an equal dose of operations-to-research (O2R), not the ‘valley of death’ it can sometimes seem. After these early experiences with operations and then several twists and turns along research paths, she is often amazed to have come nearly full circle at the DTC.