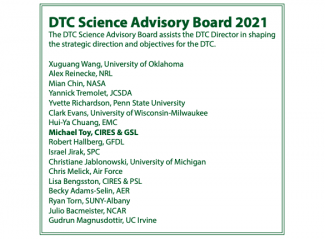

As I near the end of my 31+ year career with NOAA, this is a wonderful (and timely) opportunity to reflect on the last (almost) 6 years of model development and DTC engagement. The last time I provided my perspective for a Director’s Corner was during the Summer of 2018. At that time, I had recently joined NOAA’s National Weather Service (NWS) and was excited at the prospect of moving toward community-based model development to enhance NWS’ operational numerical weather prediction (NWP) systems. The DTC has played an essential role in that development community, and we would not be where we are today without their dedication, skill, and attention to model improvement. I also had the privilege of chairing the DTC’s Executive Committee, which gave me a chance to develop relationships with my colleagues at NOAA’s Global Systems Laboratory in the Office of Oceanic and Atmospheric Research (OAR), the US Air Force, and at NCAR.

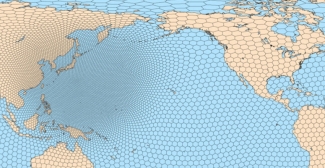

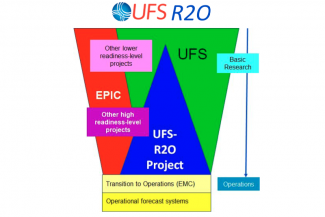

When I arrived, the DTC was already well on its way to supporting community collaboration through its numerous activities. They understood the challenge of creating a single modeling system (quite distinct from a single model!) that served the needs of both the research and operational communities. Leveraging their existing relationships with the model development community, the DTC focused on supporting the development of a UFS-based Convective-Allowing Model (CAM) out of the gate, as well as establishing a longer-term vision for support across all UFS applications. They also anticipated the need to use computing resources in the cloud, developing containers for a number of applications.

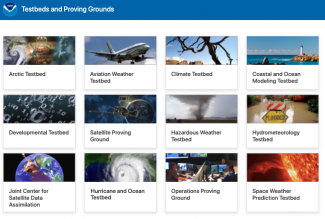

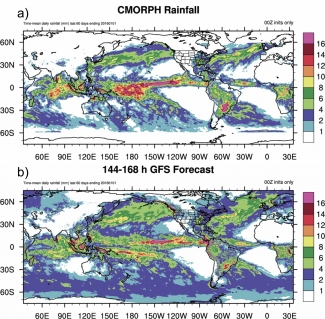

Over the last few years, the DTC has been able to shift its focus more (but not totally!) toward testing and evaluation. I think this is one of the most important elements in a successful community modeling enterprise. Perhaps the DTC activity I quote most frequently is the 2021 UFS Metrics Workshop. The DTC led the community in developing a set of agreed-to metrics across the UFS applications, verification datasets, and gaps. The community they engaged was large, including NOAA (both research labs and operational offices), NASA, NCAR, DoD, and DoE. To bring all of these organizations together was an immense achievement in my book, and the output from the workshop will be used for years to come.

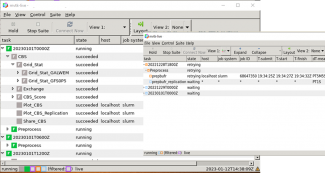

One important facet of unifying around a common set of metrics is finding a common way to calculate them when evaluating model output. The DTC has been a leader in the development and release of the METplus suite of evaluation tools. At EMC, we have based the EMC Verification System (EVS) on the DTC’s METplus work, producing the first-ever unified system for quantifying performance across all of NOAA’s operational NWP systems. It measures forecast accuracy across the full range, from short-term, high-impact weather and ocean-wave forecasts to long-term climate forecasts. The information will help quantify model performance with much more data than previously available, in a single location.

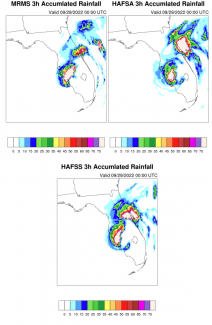

Atmospheric physics is at the heart of the performance of NWP systems and drives some of the most critical forecast parameters we deliver. The DTC develops, manages, and provides the Common Community Physics Package (CCPP) to simplify the application of a variety of physical parameterizations to NWP systems. The CCPP Framework, along with a library of physics packages that can be used within that framework, takes the concept and flexibility of framework-based modeling systems and applies it to the milieu of possible physics algorithms. This has become an essential part of EMC’s development of operational NWP systems. Indeed, the first implementation of the CCPP in an operational environment was with the new UFS-based Hurricane Analysis and Forecast System, which became operational in June 2023.

I am extraordinarily grateful for my years-long association with the DTC. Their leadership in establishing a community-modeling paradigm with the UFS has been extraordinary, and the future of NWP across the enterprise looks quite bright because of what the DTC does. Thank you!