The DTC community mourns the passing of William “Bill” Lapenta, Ph.D. Bill was the Acting Director of NOAA’s Office of Weather and Air Quality (OWAQ), the office within NOAA’s Oceanic and Atmospheric Research that supports world-class weather and air quality research. He was also the guiding force and energy behind the Earth Prediction Innovation Center (EPIC), whose goal is to propel the U.S. forward as the world leader in numerical weather prediction through public-academic-private partnerships. He was committed to bridging the divide between research and operations. Bill’s connections to the DTC date back to its early days. As Director of the Environmental Modeling Center (EMC), Bill served as a DTC Management Board member, and after becoming Director of the National Centers for Environmental Prediction (NCEP), he led the DTC Executive Committee.

Bill had already prepared his presentation on EPIC for the American Meteorological Society Annual Meeting, held in January in Boston. DaNa Carlis presented on his behalf, followed by remarks from Acting NOAA Administrator Neil Jacobs. In his presentation, Bill illustrated how public awareness of weather modeling rose when the European model predicted that Hurricane Sandy would make a hard left turn into the northeastern U.S. He laid out EPIC’s goals: to advance the skill of numerical guidance, to reclaim and maintain international leadership in numerical weather prediction (NWP), and to improve the research-to-operations transition process.

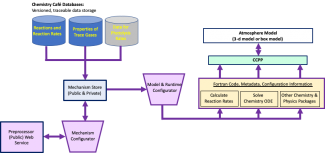

Bill then outlined how EPIC would achieve these goals: by leveraging the weather enterprise and existing resources within NOAA, enabling scientists and engineers to collaborate effectively, strengthening NOAA’s ability to undertake research projects, and creating a community global weather research modeling system.

Bill knew it was important to establish strong partnerships with academia, the private sector, and other federal agencies that share common goals and values. He also understood that open communication would connect leadership, programs, and scientists across organizational boundaries to deliver the best possible forecasts to America. Bill’s energy and leadership in bridging organizations inspire us to carry his EPIC vision forward.