The transition from current operational convection-allowing models (CAMs) to the Rapid Refresh Forecast System (RRFS) represents a significant paradigm shift in CAM ensemble forecasting for the US research and operational communities. In particular, implementing a single-dycore, single-physics RRFS will allow a number of deterministic modeling systems to be retired, along with the multi-dycore, multi-physics High-Resolution Ensemble Forecast (HREF) system. This change raises a number of ensemble design questions that must be answered to create an RRFS ensemble with sufficient spread and forecast accuracy. Single-dycore, single-physics CAM ensembles are known to lack the spread needed to account for all potential forecast solutions; a concerted research effort is therefore necessary to evaluate options for addressing this deficiency.

To this end, the DTC has been conducting two RRFS ensemble design assessments using both previous real-time data and retrospective, cold-start simulations in an operationally similar configuration. The first evaluation assessed time-lagging for the RRFS, with quantitative comparisons conducted between two RRFS-based ensembles run in real-time for the 2021 Hazardous Weather Testbed Spring Forecasting Experiment (HWT-SFE).

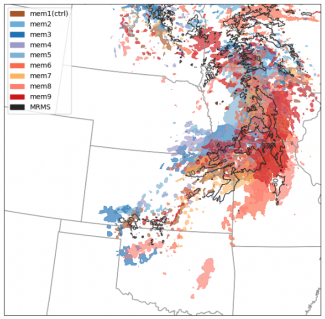

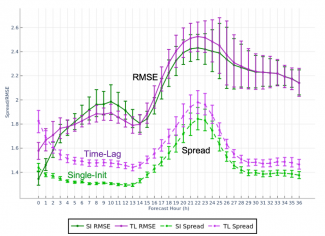

A nine-member time-lagged ensemble was created for a 13-day period during the 2021 HWT-SFE by combining five members initialized at 00Z with four members from the previous 12Z initialization. Although the time-lagged members are used at longer lead times, they still provide valuable predictive information. The skill of this 5+4 time-lagged (TL) ensemble was therefore compared with that of the full single-init (SI) ensemble, in which all nine members came from the 00Z initialization.
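
The 5+4 construction can be illustrated with a minimal Python sketch. It assumes hourly forecast output stored as NumPy arrays indexed by lead hour; the function name `build_time_lagged`, the array layout, and the 36-hour and 48-hour forecast lengths are hypothetical choices made for illustration and are not taken from the DTC workflow. The key step is aligning members by valid time, so that lead hour 0 of the 00Z run is paired with lead hour 12 of the previous 12Z run.

```python
import numpy as np

LAG_HOURS = 12  # the previous 12Z cycle is 12 h older than the 00Z cycle


def build_time_lagged(current, lagged, lag_hours=LAG_HOURS):
    """Combine members from two cycles into one ensemble aligned by valid time.

    current : array (n_current, n_leads), hourly output from the 00Z cycle
    lagged  : array (n_lagged, n_leads), hourly output from the previous 12Z cycle
    Returns an array (n_current + n_lagged, n_common) covering only the
    valid times that both cycles share.
    """
    # Lead hour h of the current cycle matches lead hour h + lag_hours of the
    # older cycle, so the usable window is limited by the shorter overlap.
    n_common = min(current.shape[1], lagged.shape[1] - lag_hours)
    if n_common <= 0:
        raise ValueError("lagged forecasts do not overlap the current cycle")
    current_part = current[:, :n_common]
    lagged_part = lagged[:, lag_hours:lag_hours + n_common]
    return np.concatenate([current_part, lagged_part], axis=0)


# Hypothetical example: 5 members from 00Z (leads 0-36 h) and
# 4 members from the previous 12Z (leads 0-48 h).
current = np.zeros((5, 37))
lagged = np.tile(np.arange(49.0), (4, 1))  # value equals lead hour, for checking
tl_ensemble = build_time_lagged(current, lagged)
print(tl_ensemble.shape)
```

In this layout the lagged members contribute their 12 h to 48 h forecasts, which is why they carry longer lead times than the 00Z members at every valid time.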

While the primary goal of a time-lagged ensemble is to derive additional predictive information from older initializations without the added cost of numerical integration, the results revealed that an ensemble including TL members can contribute additional spread compared with an SI ensemble of the same size, with little to no degradation in skill. This result held for most variables, atmospheric levels, and ensemble metrics, including evaluations of spread and accuracy, reliability, Brier score, and more. Almost all metrics exhibited a matched (and occasionally improved) level of skill and reliability between the TL and SI experiments, while many variables showed a statistically significant increase in spread.
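
For readers less familiar with these metrics, the following Python sketch shows one common way to compute ensemble spread, ensemble-mean RMSE, and the Brier score for a threshold exceedance. It is a generic illustration under stated assumptions, not the verification code used in the evaluation: the function names, the array layout, and the toy values are hypothetical, and the significance testing mentioned above is not shown.

```python
import numpy as np


def ensemble_spread(ens):
    """Square root of the mean ensemble variance; ens is (n_members, n_points)."""
    return float(np.sqrt(np.mean(np.var(ens, axis=0, ddof=1))))


def ensemble_mean_rmse(ens, obs):
    """RMSE of the ensemble mean against observations of shape (n_points,)."""
    return float(np.sqrt(np.mean((ens.mean(axis=0) - obs) ** 2)))


def brier_score(ens, obs, threshold):
    """Brier score for the event 'value >= threshold'.

    The forecast probability is the fraction of members exceeding the threshold.
    """
    prob = np.mean(ens >= threshold, axis=0)
    outcome = (obs >= threshold).astype(float)
    return float(np.mean((prob - outcome) ** 2))


# Toy values: two members at two verification points.
ens = np.array([[0.0, 2.0],
                [2.0, 4.0]])
obs = np.array([1.0, 3.0])

print(ensemble_spread(ens))          # spread of the toy ensemble
print(ensemble_mean_rmse(ens, obs))  # error of the ensemble mean
print(brier_score(ens, obs, 2.0))    # probabilistic skill for value >= 2
```

Comparing spread with ensemble-mean RMSE is a standard consistency check: an ensemble whose spread is much smaller than its RMSE is underdispersive, which is the concern raised for single-dycore, single-physics designs.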

In preparation for the first implementation of the RRFS, results were communicated to model developers and management at NOAA/GSL and NOAA/EMC, the principal laboratories working on the system. Due to computational constraints, and informed by our findings, a decision was made to pursue the use of time-lagging as part of the ensemble design of RRFSv1.

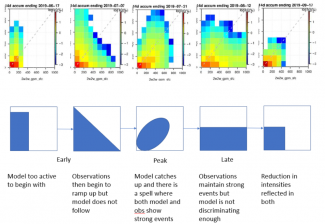

A second evaluation will explore the impact of initial condition (IC) uncertainty and stochastic physics in retrospective cold-start simulations. Using IC perturbations from Global Ensemble Forecast System (GEFS) forecasts, these experiments are run with and without stochastic physics to isolate the impact of each uncertainty representation method, to investigate how each might be optimized, and to track how their contributions evolve as a function of forecast lead time. With these results, in combination with the time-lagging experiments, we hope to help address the pressing need for operational systems to produce an adequate sample of potential outcomes while balancing the constraints of computational cost.